Neural Networks — History

In this article, I want to give a brief introduction to neural networks.

The first neural network, called the perceptron, was designed by Frank Rosenblatt in 1957.

The concepts behind neural networks are loosely inspired by biological neurons (biological neurons are far more complicated).

At a high level, a neuron contains three parts:

- Dendrites -> Dendrites collect the incoming impulses. The size and width of a dendrite determine the strength of the impulse

- Cell body (soma) -> The cell body, which contains the nucleus, collects the impulses from all the dendrites and processes the information

- Axon -> The axon sends the information on to other neurons

Biological neurons do not work in isolation; they exist in networks. The output of one neuron connects to the dendrites of other neurons, and together they form a network.

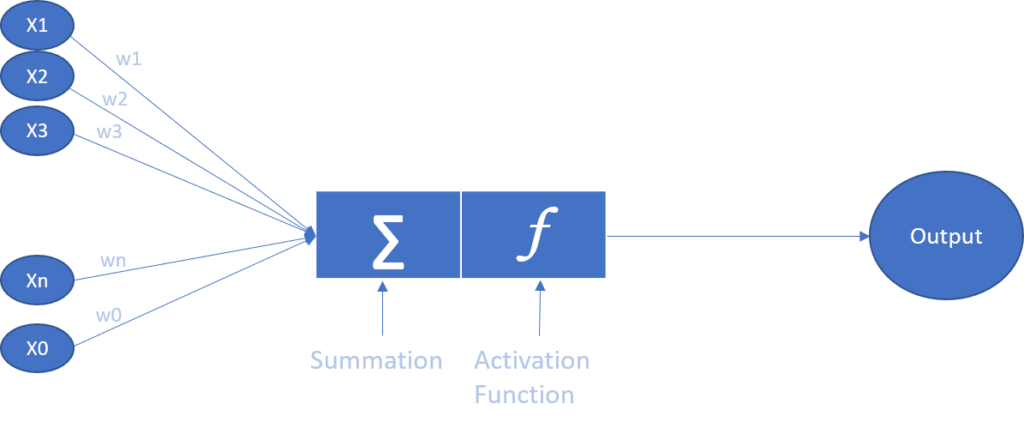

In artificial neural networks, we pass inputs x1, x2, x3, …, xn to a neuron, with w1, w2, w3, …, wn as the corresponding weights. Each input is multiplied by its weight, the products are summed, and the sum is passed through an activation function. The value returned by this function is the neuron's output.
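To make the weighted sum and activation step concrete, here is a minimal sketch of a single artificial neuron in plain Python. The function names (`neuron_output`, `sigmoid`, `step`), the optional `bias` term, and the example numbers are my own assumptions for illustration; the text above does not prescribe a particular activation function.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def step(z):
    """Threshold activation: fire (1) if the sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def neuron_output(inputs, weights, bias=0.0, activation=sigmoid):
    """Compute the output of one artificial neuron.

    The bias term and the default sigmoid activation are assumptions
    added for this sketch.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Weighted sum: x1*w1 + x2*w2 + ... + xn*wn, plus the bias
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The activation function turns the sum into the neuron's output
    return activation(weighted_sum)


# Hypothetical inputs and weights, chosen only for illustration
x = [1.0, 0.5, -1.0]
w = [0.4, -0.2, 0.1]

print(neuron_output(x, w))                   # smooth output between 0 and 1
print(neuron_output(x, w, activation=step))  # hard 0/1 decision
```

Swapping the `activation` argument is enough to change how the neuron responds: a step function gives a hard yes/no decision, while a sigmoid gives a smooth value between 0 and 1.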

In 1986, Hinton and others published work on how to train artificial neural networks, using what is called the backpropagation algorithm. At that time, neural networks still performed poorly when they had many hidden layers (the layers between the input and output layers).

Neural networks gained popularity again in 2012 with the ImageNet competition.

Today, artificial neural networks are used in applications such as voice assistants and self-driving cars.

I hope you liked this article. Please share your feedback.